Helpful Concepts & Terminology

Faraday is primarily used with LLaMa 2 models that have been fine-tuned for conversations.

These models have been trained to continue a back-and-forth dialogue between two or more parties. The model generates text until it is about to start the user’s response, at which point it stops and allows you to add the user’s side of the chat.
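The stopping behavior described above can be pictured as cutting the generated text at the point where the user's turn would begin. The following is a minimal sketch, not Faraday's actual implementation: the function name `cut_at_user_turn` and the `"\nUser:"` marker are assumptions made for illustration, since the exact turn format depends on the model and its prompt template.

```python
def cut_at_user_turn(generated: str, stop: str = "\nUser:") -> str:
    """Return the model's reply, truncated where the user's turn would begin.

    The stop marker is a hypothetical example; real prompt templates vary.
    """
    index = generated.find(stop)
    # If the stop marker never appears, keep the full text.
    return generated if index == -1 else generated[:index]


raw_output = "Sure, I can help with that.\nUser: Thanks! What about"
reply = cut_at_user_turn(raw_output)
print(reply)
```

In practice the generation loop usually halts as soon as the marker is detected, rather than trimming afterwards, but the visible result is the same.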

Base Models

LLaMa

LLaMa is a large language model (LLM) developed by Meta. Recently, Meta released LLaMa 2, a new and improved base model that is supported on Faraday.

The base model comes in several sizes, each with a different number of parameters. The original LLaMa was released in 7B, 13B, 33B and 65B sizes, and LLaMa 2 in 7B, 13B and 70B. A higher parameter count generally means more nuanced and accurate responses, but also requires significantly more processing power.

Other Base Models

Other base models have been released that use the same architecture as LLaMa.

- Mistral is a 7B base model that has largely replaced LLaMa 2 7B.

- Yi is another powerful base model that comes in multiple parameter sizes.

These base models are supported on Faraday.

What is fine-tuning?

Fine-tuned models extend the capabilities of their base model. They are trained (via curated datasets) to generate text in a specific style, such as instruction-following, programming assistance, or roleplay.

Each model in the Faraday marketplace is a fine-tuned model (the base model is never run directly). Each one has been trained on conversational datasets by various third parties.

Tokens

Large language models (LLMs) generate text by calculating which tokens are most likely to come next, given an input sequence. To make this calculation possible, the text is first split into small pieces called "tokens", each of which is represented by a number. A token is approximately 3-4 letters.
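To make the idea of turning text into numbers concrete, here is a toy sketch. Everything in it is an assumption for illustration: the five-entry vocabulary `TOY_VOCAB` and the greedy longest-match splitting in `toy_tokenize` are hypothetical. Real tokenizers learn vocabularies with tens of thousands of entries from data and use a different splitting algorithm.

```python
# Hypothetical toy vocabulary: real models use tens of thousands of entries.
TOY_VOCAB = {"Hel": 0, "lo": 1, " wor": 2, "ld": 3, "!": 4}


def toy_tokenize(text: str, vocab: dict[str, int]) -> list[int]:
    """Greedy longest-match split of text into token IDs from vocab."""
    ids = []
    pos = 0
    longest = max(len(piece) for piece in vocab)
    while pos < len(text):
        # Try the longest candidate piece first, then shorter ones.
        for size in range(min(longest, len(text) - pos), 0, -1):
            piece = text[pos:pos + size]
            if piece in vocab:
                ids.append(vocab[piece])
                pos += size
                break
        else:
            # No vocabulary entry matches at this position.
            raise ValueError(f"no token covers {text[pos]!r}")
    return ids


token_ids = toy_tokenize("Hello world!", TOY_VOCAB)
print(token_ids)
```

Here the 12-character string becomes 5 tokens, which is in the same range as the rule of thumb of 3-4 letters per token.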

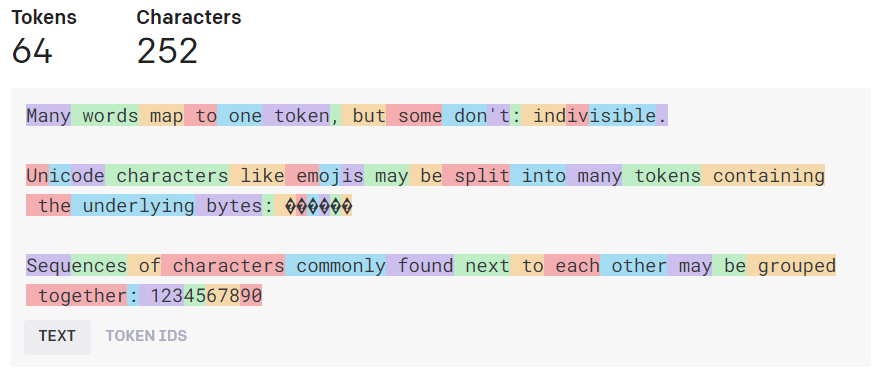

Here is a visualization of text broken into tokens:

Context

The exact set of tokens processed when generating the next token is called the "context".

Models can only process a certain amount of context at once. LLaMa 1-based models are limited to a maximum of 2048 tokens, while LLaMa 2-based models can take in up to 4096 tokens.

Context Window

When your conversation history starts to exceed the context window (the maximum number of tokens the model can process at once), the older messages are removed incrementally from the model context. If you find the model forgetting something you talked about an hour ago, this is why.
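The effect of older messages dropping out can be sketched as follows. This is an illustration under stated assumptions, not Faraday's actual logic: `estimate_tokens` uses a rough guess of 4 characters per token, and `trim_history` is a hypothetical helper that simply removes the oldest message first until the rest fits.

```python
import math


def estimate_tokens(text: str) -> int:
    """Rough estimate only: assumes about 4 characters per token."""
    return math.ceil(len(text) / 4)


def trim_history(messages: list[str], max_tokens: int) -> list[str]:
    """Drop the oldest messages until the remainder fits in max_tokens."""
    kept = list(messages)  # copy, so the caller's list is not modified
    while kept and sum(estimate_tokens(m) for m in kept) > max_tokens:
        kept.pop(0)  # remove the oldest message first
    return kept


history = ["a" * 40, "b" * 40, "c" * 40]  # about 10 tokens each
trimmed = trim_history(history, max_tokens=25)
print(len(trimmed))
```

With a budget of 25 tokens, only the two most recent messages survive; the first one is no longer visible to the model, which is why it appears to have been forgotten.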